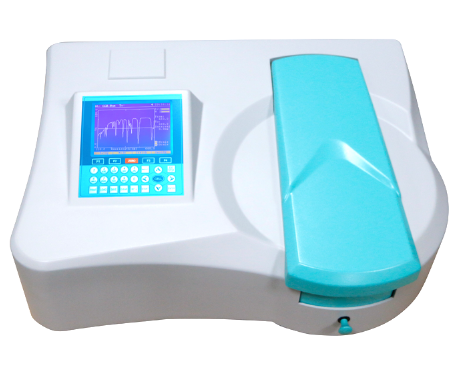

Key performance indicators of the ultraviolet-visible spectrophotometer

(1) Precision The precision of an instrument such as a UV-visible spectrophotometer is the degree of agreement among the results of repeated parallel measurements of the same sample. It characterizes the size of the instrument's random error. According to the relevant recommendations of the International Union of Pure and Applied Chemistry (IUPAC), precision is usually expressed as the relative standard deviation (RSD). Even the same instrument shows different precision for different test items, concentration levels, and so on.
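As an illustration of the RSD described above, the following is a minimal sketch rather than a prescribed procedure. The function name `relative_standard_deviation` and the absorbance readings are hypothetical, and the sample standard deviation (n - 1 in the denominator) is assumed.

```python
import statistics


def relative_standard_deviation(readings):
    """Return the RSD, in percent, of replicate readings of one sample."""
    if len(readings) < 2:
        raise ValueError("need at least two replicate readings")
    mean = statistics.mean(readings)
    if mean == 0:
        raise ValueError("mean is zero; RSD is undefined")
    # statistics.stdev is the sample standard deviation (n - 1 denominator).
    return 100.0 * statistics.stdev(readings) / abs(mean)


# Hypothetical absorbance readings from five parallel measurements.
absorbances = [0.501, 0.499, 0.502, 0.498, 0.500]
rsd_percent = relative_standard_deviation(absorbances)
print(f"RSD = {rsd_percent:.2f} %")
```

A smaller RSD means better precision. Because precision depends on the test item and the concentration level, the RSD is only meaningful when reported together with those conditions.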

(2) Sensitivity The sensitivity of an instrument is a measure of its ability to distinguish analytes that differ only slightly in concentration. According to IUPAC, the quantitative definition of sensitivity is the calibration sensitivity, that is, the slope of the calibration curve over the measured concentration range. Many calibration curves used in analytical chemistry are linear and are typically obtained by measuring a series of standard solutions. Individual spectroscopic techniques also have their own customary expressions of sensitivity. For example, in atomic absorption spectrometry, sensitivity is often expressed as the "characteristic concentration", the so-called 1% absorption sensitivity (the concentration that produces 1% absorption). In atomic emission spectrometry, relative sensitivity is often used to express the analytical sensitivity for different elements; it refers to the minimum concentration of an element in a sample that can be detected.
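The calibration sensitivity can be estimated as the least-squares slope of signal against concentration. The sketch below assumes a linear calibration curve; the function name `calibration_line` and the standard-solution data are hypothetical.

```python
def calibration_line(concentrations, signals):
    """Least-squares slope and intercept of signal versus concentration."""
    n = len(concentrations)
    if n != len(signals) or n < 2:
        raise ValueError("need two or more matching (concentration, signal) pairs")
    mean_c = sum(concentrations) / n
    mean_s = sum(signals) / n
    sxx = sum((c - mean_c) ** 2 for c in concentrations)
    if sxx == 0:
        raise ValueError("all concentrations are identical")
    sxy = sum((c - mean_c) * (s - mean_s)
              for c, s in zip(concentrations, signals))
    slope = sxy / sxx                      # calibration sensitivity
    intercept = mean_s - slope * mean_c    # signal at zero concentration
    return slope, intercept


# Hypothetical standard solutions (mg/L) and their measured absorbances.
standards_mg_per_l = [0.0, 2.0, 4.0, 6.0, 8.0]
absorbances = [0.002, 0.101, 0.203, 0.299, 0.401]
slope, intercept = calibration_line(standards_mg_per_l, absorbances)
print(f"sensitivity = {slope:.4f} absorbance units per mg/L")
```

A steeper slope means that a given difference in concentration produces a larger difference in signal, which is what makes small concentration differences distinguishable.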

(3) Detection limit Assuming that the measurement errors follow a normal distribution, the detection limit can be defined statistically as the minimum amount or concentration of a component that can be detected with an appropriate confidence level. It is derived from the minimum detectable signal.

Detection limit and sensitivity are two closely related quantities: the higher the sensitivity, the lower the detection limit. Their meanings differ, however. Sensitivity is the magnitude of the change in the analytical signal per unit change in the content of the component, so it depends directly on the amplification (gain) of the detector. The detection limit is the lowest amount or concentration that the analytical method can detect; it is directly related to the measurement noise and has a clear statistical meaning. It follows from the definition that improving the measurement precision and reducing the noise lowers (improves) the detection limit.
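The relationship between noise, sensitivity, and detection limit can be sketched with the commonly used convention that the detection limit equals k times the standard deviation of the blank signal divided by the calibration slope, with k = 3. The text above does not state this formula, so it is an assumption here, as are the function name `detection_limit` and the blank readings.

```python
import statistics


def detection_limit(blank_signals, slope, k=3.0):
    """Detection limit as k * (std. dev. of blank) / (calibration slope).

    k = 3 is a widely used convention, assumed here for illustration.
    """
    if len(blank_signals) < 2:
        raise ValueError("need at least two blank readings")
    if slope <= 0:
        raise ValueError("slope must be positive")
    noise = statistics.stdev(blank_signals)  # measurement noise of the blank
    return k * noise / slope


# Hypothetical blank absorbances and a slope in absorbance per mg/L.
blank = [0.0010, 0.0012, 0.0008, 0.0011, 0.0009]
lod = detection_limit(blank, slope=0.05)
print(f"detection limit = {lod:.4f} mg/L")
```

In this form, doubling the slope (higher sensitivity) or halving the blank noise each halves the detection limit, which matches the qualitative statements above.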

(4) Linear range of the calibration curve The linear range extends from the lowest concentration that can be determined quantitatively to the highest concentration at which the calibration curve remains linear. The linear range varies greatly between instruments. For example, in atomic absorption spectrometry it is generally only 1-2 orders of magnitude, while inductively coupled plasma atomic emission spectrometry can reach 5-6 orders of magnitude.
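One simple way to estimate where linearity ends is to fit a line through the lowest standards and then find the highest standard whose signal still lies within a chosen tolerance of that line. The sketch below is only one such approach: the function name `linear_upper_limit`, the 5% tolerance, the choice of three fitting points, and the data are all assumptions made for illustration.

```python
import math


def linear_upper_limit(concentrations, signals, n_fit=3, tolerance=0.05):
    """Highest standard still within `tolerance` (relative deviation) of the
    line fitted through the lowest `n_fit` standards.

    Standards must be sorted by increasing concentration.
    """
    if len(concentrations) != len(signals) or len(concentrations) < n_fit:
        raise ValueError("not enough matching data points")
    cs, ss = concentrations[:n_fit], signals[:n_fit]
    mean_c, mean_s = sum(cs) / n_fit, sum(ss) / n_fit
    sxx = sum((c - mean_c) ** 2 for c in cs)
    slope = sum((c - mean_c) * (s - mean_s) for c, s in zip(cs, ss)) / sxx
    intercept = mean_s - slope * mean_c

    upper = concentrations[n_fit - 1]
    for c, s in zip(concentrations[n_fit:], signals[n_fit:]):
        predicted = slope * c + intercept
        if abs(s - predicted) > tolerance * abs(predicted):
            break  # the curve has bent away from the line
        upper = c
    return upper


# Hypothetical standards (mg/L); the curve flattens at high concentration.
conc = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]
absorbance = [0.05, 0.10, 0.20, 0.40, 0.78, 1.30]
upper = linear_upper_limit(conc, absorbance)
decades = math.log10(upper / conc[0])
print(f"linear from {conc[0]} to {upper} mg/L ({decades:.1f} orders of magnitude)")
```

The lower end of the range is taken here as the lowest standard; in practice it would be set by the lowest concentration that can be determined quantitatively.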

(5) Resolution Resolution refers to the ability of a spectral analysis instrument to distinguish between two adjacent spectral lines. The higher the resolution, the better the instrument can separate two adjacent spectral lines without overlap. The resolution mainly depends on the instrument's spectroscopic (dispersive) system and detector.

(6) Selectivity The selectivity of an instrument refers to the degree to which its measurements are free from interference by other substances in the sample matrix. In practice, any instrument may suffer interference from other substances, and a specific method is generally needed to overcome or correct for it.

